Progressive Misconceptions

Last week, my colleague[1] Nolan Lawson wrote a lengthy post about his struggles with progressive enhancement. In it, he identified a key tension between the JavaScript community and the progressive enhancement community that has, frankly, existed since the term “progressive enhancement” was coined some 13 years ago. I wanted to take a few minutes to tuck into that tension and assure Nolan and other folks within the JS community that neither progressive enhancement nor the folks who advocate it (like me) are at odds with them or their work.

But first let’s take a trip back in time to 2003. In March of that year, Steve Champeon introduced a concept he called “progressive enhancement”. It caused a bit of an upheaval at the time because it challenged the dominant philosophy of graceful degradation. Just so we’re all on the same page, I’ll compare these two philosophies.

What’s graceful degradation?

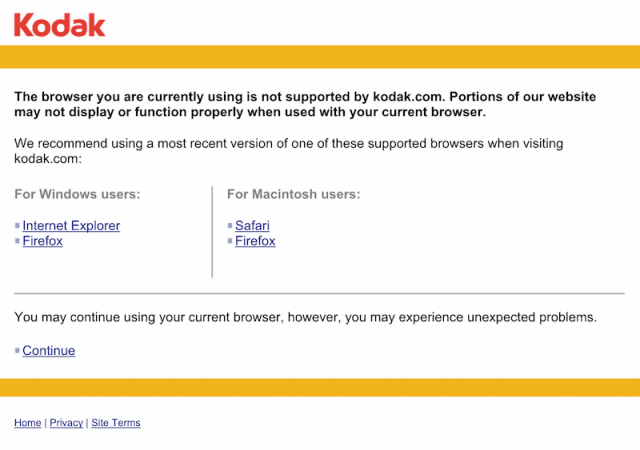

Graceful degradation assumes that an experience is going to be worse on older, less capable browsers and devices. To address potential problems, it recommends that developers take steps to avoid throwing errors—JavaScript or otherwise—for their users. Under this philosophy, a developer can take a range of approaches ranging from making everything work perfectly in down-level browsers to only addressing egregious errors or even chosing to block certain browsers from accessing the content if they are known to have problems. We saw this latter approach often with Flash-only sites, but it wasn’t limited to them. I used this “roadblock” example from Kodak.com in my book:

Overall, graceful degradation is about risk avoidance. The problem was that it created a climate on the Web where we, as developers, got comfortable with the idea of denying access to services (e.g., people’s bank accounts) because we deemed a particular browser (or browsers) too difficult to work with. Or, in many cases, we just didn’t have the time or budget (or both) to address the broadest number of browsers. It’s kind of hard to reconcile the challenge of cross-browser development in 2003 with what we are faced with today as we were only really dealing with 2-3 browsers back then, but you need to remember that standards support was far worse at the time.

So what’s progressive enhancement?

In his talk, Steve upended the generally shared perspective that older browsers deserved a worse experience because they were less technically capable. He asked us to look beyond the browsers and the technologies in play and focus on the user experience, challenging us to design inclusive experiences that would work in the broadest of scenarios. He asked that we focus on the content and core tasks in a given interface and then enhance the experience when we could. We accomplish this by layering experiences on top of one another, hence “progressive enhancement”.

What’s particularly interesting about this approach is that it is still technically graceful degradation because all of the interfaces do gracefully fall back to a usable state. But it’s graceful degradation at its best, focused on delivering a good experience to everyone. No excuses.

To give a simple example, consider a form field for entering your email address. If I mark it up like this

`<input type="email" name="email" id="email" />`

I automatically create layers of experience with no extra effort:

- Browsers that don’t understand “email” as a valid `input` type will treat the “email” text as a typo in my HTML (like when you type “rdio” instead of “radio”… or maybe I’m the only one that does that). As a result, they will fall back to the default input type of “text”, which is usable in every browser that supports HTML2 and up.

- Browsers that consider “email” a valid `input` type will provide one (or more) of many potential enhanced experiences:

  - In a virtual keyboard context, the browser may present a keyboard that is tailored toward quickly entering email addresses.

  - In a browser that supports auto-completion, it may use this as a cue to suggest entering a commonly entered email or one that has been stored in the user’s profile.

  - In a browser that supports HTML5 validation, the browser may validate this field for proper email formatting when the user attempts to submit the form.

  - In a browser that does not support HTML5 validation or that doesn’t actively block submission on validation errors (like Safari), a developer-supplied JavaScript program may use the `type` attribute as a signal that it should validate the field for proper email address formatting.

That means that there are between 5 and 13 potential experiences (given all of the different possible combinations of these layers) in this one single element… it’s kind of mind-boggling to think about, right? And the clincher here is that any of these experiences can be a good experience. Heck, for nearly 15 years of the Web, the plain-ol’ text input was the only way we had for entering an email address. Anything better than that is gravy.
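To make that last layer a little more concrete, here is a rough sketch of how a script might treat the `type` attribute as its cue to validate. The function names and the deliberately loose `EMAIL_PATTERN` are my own assumptions for illustration, not a recommended validation rule; the point is only that `getAttribute('type')` still reports what I wrote in the markup, even in a browser that has fallen back to a text field.

```javascript
// Deliberately loose format check, for illustration only (an assumption,
// not a complete email validation rule).
const EMAIL_PATTERN = /^[^\s@]+@[^\s@]+\.[^\s@]+$/;

// True when the browser itself understands type="email".
// Browsers that don't will report the fallback type, "text".
function supportsEmailType(doc) {
  const probe = doc.createElement('input');
  probe.setAttribute('type', 'email');
  return probe.type === 'email';
}

// Returns the fields marked up as type="email" whose non-empty value
// fails the check. getAttribute() reads the authored markup, so this
// works even where the browser treats the field as plain text.
function findInvalidEmailFields(fields) {
  return Array.from(fields).filter((field) =>
    field.getAttribute('type') === 'email' &&
    field.value !== '' &&
    !EMAIL_PATTERN.test(field.value)
  );
}
```

In a page, I would likely call `findInvalidEmailFields(form.elements)` from a `submit` handler and only cancel the submission when it returns something. If the script never runs, the form still submits and the server does the checking.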

Progressive enhancement embraces the idea of experience as a continuum rather than some singular ideal. It recognizes that every person is different and we all have special requirements for Web access. Some may depend on our browser, the device we’re on, and the network we are using. Others may be the result of a limitation we have dealt with since birth, are dealing with temporarily as the result of an injury or incident, or are simply a factor of our current situation. We all experience the world differently and progressive enhancement not only respects that, it embraces that variability.

How does it do this? Progressive enhancement takes advantage of the fault-tolerant nature of HTML and CSS. It also uses JavaScript’s own ability to test for browser features to tailor programmatic enhancements to the given device and situation. That’s right: progressive enhancement and JavaScript go hand-in-hand.
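As a minimal sketch of what testing for features can look like, each enhancement below declares the test it depends on and only the ones that pass get applied. The enhancement names and the `env` parameter (standing in for the browser's globals) are hypothetical; the individual tests use real browser features.

```javascript
// Each enhancement declares the feature test it depends on.
// `env` stands in for the browser globals (e.g. window); the
// enhancement names are hypothetical.
const enhancements = [
  { name: 'offline-cache', test: (env) => 'serviceWorker' in env.navigator },
  {
    name: 'saved-drafts',
    test: (env) => typeof env.localStorage === 'object' && env.localStorage !== null,
  },
  { name: 'lazy-images', test: (env) => typeof env.IntersectionObserver === 'function' },
];

// Returns the names of the enhancements this environment can support.
// A test that throws (some privacy settings make storage access throw)
// counts as "not supported" rather than breaking the page.
function availableEnhancements(env, list = enhancements) {
  return list
    .filter((item) => {
      try {
        return Boolean(item.test(env));
      } catch (error) {
        return false;
      }
    })
    .map((item) => item.name);
}
```

In a browser I would call `availableEnhancements(window)`. Anything that doesn't make the list simply never gets layered on, and the baseline experience stays as it was.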

Why are so many JavaScript folks hostile to progressive enhancement?

As a member of the JavaScript community for over a decade now, I have a theory for why many JavaScript developers are so antagonistic toward progressive enhancement. Part of it has to do with history and part of it has to do with programming culture. Let’s tackle the history first.

When progressive enhancement was first proposed, the Web was getting more standardized, but things were still a bit of a mess… especially in the JavaScript world. Many JavaScript programs were poorly written, contained lots of browser-specific code, and were generally unfriendly to anyone who fell outside of the relatively narrow band of “normal” Web use… like screen reader users, for example. It’s not surprising though: Graceful degradation was the name of the game at the time.

Because JavaScript programs were creating barriers for users who just wanted to read news articles, access public services, and check their bank accounts, many accessibility advocates recommended that these folks disable JavaScript in their browsers. By turning off JavaScript, the theory went, users would get clean and clear access to the content and tasks they were using the Web for. Of course that was in the days before Ajax, but I digress.

This recommendation served as a bit of a wake-up call for many JavaScript developers who had not considered alternate browsing experiences. Some chose to write it off and continued doing their own thing. Others, however, accepted the challenge of making JavaScript more friendly to the folks who relied on assistive technologies (AT). Many even went on to write code that actually improved the experience specifically for folks who are AT-dependent. Dojo and YUI, though sadly out of favor these days, were two massive libraries that prioritized accessibility. In fact, I’d go so far as to say they ushered in a period of alignment between JavaScript and accessibility.

Even though JavaScript and accessibility are no longer at odds (and really haven’t been for the better part of a decade), there are still some folks who believe they are. People routinely come across old articles that talk about JavaScript being inaccessible and they turn around and unfairly demonize JavaScript developers as unsympathetic toward folks who rely on screen readers or other AT. It’s no wonder that some JavaScript developers become immediately defensive when the subject of accessibility comes up… especially if it’s not something they’re all that familiar with.

I also mentioned that programming culture plays a part in the antagonistic relationship between the progressive enhancement camp and the JavaScript community. If you’ve been a programmer for any amount of time, you’ve probably borne witness to the constant finger-pointing, belittling, and arrogance when it comes to the languages we choose to program in or the tools we use to do it.

As a programmer, you receive a near constant barrage of commentary on your choices… often unsolicited. You’re using PHP? That’s so 1996! You’re still using TextMate?! You still use jQuery? How quaint! I’m not exactly sure where this all began, but it’s unhealthy and causes a lot of programmers to get immediately defensive when anyone challenges their language of choice or their process. And this hostile/defensive environment makes it very difficult to have a constructive conversation about best practices.

Progressive enhancement should not be viewed as a challenge to JavaScript any more than concepts like namespacing, test-driven development, or file concatenation & minification are; it’s just another way to improve your code. That said, progressive enhancement does introduce a wrinkle that many hardcore JavaScript programmers seem unwilling to concede: JavaScript is fragile. At least on the client side, JavaScript development requires far more diligence when it comes to error handling and fallbacks than traditional programming because, unlike with traditional software development, we don’t control the execution environment.

Douglas Crockford (in)famously declared the Web “the most hostile software engineering environment imaginable” and he wasn’t wrong. A lot of things have to go right for our code to reach our users precisely the way we intend. Here are just a few of these requirements:

- Our code must be bug-free;

- Included 3rd party code must be bug-free and must not interfere with our code;

- Intermediaries (ISPs, routers, etc.) must not inject code or, if they do, it must be bug-free and not interfere with our code;

- Browser plugins must not interfere with our code;

- The browser must support every language feature and API we want to use; and

- The device must have enough RAM and a fast enough processor to run our code.

Some of these can be addressed by programming defensively using test-driven development, automated QA testing, feature detection, and markup detection. These aren’t guaranteed to catch everything (markup can change after a test has run but before the rest of the code has executed, JavaScript objects are mutable, meaning features can accidentally disappear, etc.), but they are incredibly helpful for creating robust JavaScript programs. You can also run your projects under HTTPS to avoid intermediaries manipulating your code, though that’s not foolproof either.
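Markup detection, in particular, can be sketched in a few lines. The helper below is hypothetical (the name `enhanceIfPresent` and its return value are my own choices): it only runs an enhancement when the markup it depends on is actually in the page, and it contains any error so that one failed enhancement doesn't take the rest of the script down with it.

```javascript
// Runs `enhance` on each element matching `selector`, but only if the
// markup is actually there. Errors are contained per element, leaving
// that element in its baseline (unenhanced) state.
// Returns how many elements were successfully enhanced.
function enhanceIfPresent(root, selector, enhance) {
  // Feature detection: bail out quietly if we can't even query the markup.
  if (!root || typeof root.querySelectorAll !== 'function') {
    return 0;
  }

  let enhanced = 0;
  // Markup detection: an empty result simply means nothing to do.
  for (const element of Array.from(root.querySelectorAll(selector))) {
    try {
      enhance(element);
      enhanced += 1;
    } catch (error) {
      // Swallow the error; the baseline experience still works.
    }
  }
  return enhanced;
}
```

In a page this might be called as `enhanceIfPresent(document, '.tabs', makeTabbed)`, where `.tabs` and `makeTabbed` stand in for whatever markup pattern and enhancement a project actually uses.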

The devices themselves, we have no control over. It’s not like we can send a new device to each and every user (or prospective user) we have just to ensure they have the appropriate hardware and software requirements to use our product.[2] Instead, we need to write JavaScript programs that play well in a multitude of scenarios (including resource-limited ones).

And, of course, none of this addresses network availability. In many instances, a user’s network connection has the greatest impact on their experience of our products. If the connection is slow (or the page’s resources are exceptionally large) the page load experience can be excruciatingly painful. If the connection goes down and dependencies aren’t met, the experience can feel disjointed or may be flat out broken. Using Service Worker and client-side storage (IndexedDB and Web Storage) can definitely help mitigate these issues for repeat visits, but they don’t do much to help with initial load. They also don’t work at all if your JavaScript program doesn’t run. Which brings me to my last point.
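For what it’s worth, here is roughly what treating those two tools as enhancements might look like. This is a sketch under assumptions: the `/sw.js` path and both function names are hypothetical, while `navigator.serviceWorker.register()` and `Storage.getItem()` are the standard APIs. Either function quietly falls back when its feature is missing or fails.

```javascript
// Registers a service worker when the browser supports it.
// Resolves to the registration, or to null when unsupported or when
// registration fails. The '/sw.js' default is a hypothetical path.
function registerOfflineSupport(nav, scriptUrl = '/sw.js') {
  if (!nav || !('serviceWorker' in nav)) {
    return Promise.resolve(null);
  }
  return nav.serviceWorker.register(scriptUrl).catch(() => null);
}

// Reads a JSON value from Web Storage, returning `fallback` when the
// key is missing, the stored value is not valid JSON, or storage is
// unavailable.
function readCached(storage, key, fallback) {
  try {
    const raw = storage.getItem(key);
    return raw === null ? fallback : JSON.parse(raw);
  } catch (error) {
    return fallback;
  }
}
```

In a browser these would be called with `navigator` and `localStorage`. Neither helps on a first visit, and neither does anything if the script itself never arrives, which is exactly why the baseline experience can’t depend on them.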

When you love a language like JavaScript (as I do), it can be difficult to recognize (or even admit) its shortcomings, but recognizing them is part of becoming a better programmer. The Web is constantly evolving and our understanding of the languages we use to build it expands as fast as (or often faster than) their capabilities do. As such, we need to remain open to new and different ways of doing things. Change can be scary, but it can also be good. Being asked to consider a non-JavaScript experience shouldn’t be seen as an affront to JavaScript, but rather a challenge to create more robust experiences. After all, our last line of defense in providing a good user experience is providing one with the fewest dependencies. That’s what progressive enhancement asks us to do.

JavaScript & PE kissing in a tree?

All of this is to say I don’t think JavaScript and progressive enhancement are diametrically opposed and I don’t think folks who love the JavaScript language or tout the progressive enhancement philosophy should be either. Together they have the potential to make the Web the best it can possibly be.

Progressive enhancement’s focus on providing a baseline experience that makes no assumptions about browser features will provide a robust foundation for any project. It also guides us to be smarter about how we apply technologies like HTML, CSS, JavaScript and ARIA by asking us to consider what happens when those dependencies aren’t met.

JavaScript absolutely makes the user experience better for anyone who can benefit from it. It can make interfaces more accessible. It can help mitigate networking issues. It can create smoother, more seamless experiences for our users. And it can reduce the friction inherent in accomplishing most tasks on the Web. JavaScript is an indispensable part of the modern Web.

In order to come together, however, folks need to stop demonizing and dismissing one another. Instead we need to rally together to make the Web better. But before we can do that, we need to start with a common understanding of the nature of JavaScript. The progressive enhancement camp needs to concede that not all JavaScript is evil, or even bad; JavaScript can be a force for good and it’s got really solid support. The JavaScript camp needs to concede that, despite its ubiquity and near universal support, we can never be absolutely guaranteed our JavaScript programs will run.

I fully believe we can heal this rift, but it’s probably gonna take some time. I fully intend to do my part and I hope you will as well.

Update: This post was updated to clarify that graceful degradation can take many forms and to explicitly tie progressive enhancement and graceful degradation together.

Footnotes

1. Full disclosure: We both work at Microsoft, but on different teams.

2. It’s worth noting that one company, NursingJobs, actually did this.

Webmentions

- "progressive enhancement and JavaScript go hand-in-hand" - @AaronGustafson aaron-gustafson.com/notebook/progr…

- Thanks for sharing. I appreciate the historical perspective and agree that the rift needs to be healed.

- on culture side, I thought graceful degradation was supposed to be “do less down level but DON’T block access”

- I definitely disagree with your description of graceful degradation. It is degradation without grace.

- Nice post! Thank you.

- I always think of it as a phone number to call if you can't use our Web site @AaronGustafson

- Super article by @AaronGustafson on progressive enhancement, accessibility and JavaScript, and we CAN have it all. aaron-gustafson.com/notebook/progr…

- Progressive Misconceptions aaron-gustafson.com/notebook/progr… my colleague @AaronGustafson trying to explain the problems with Progressive Enhancement

- Powerful piece by @AaronGustafson on why progressive enhancement and JS go hand in hand: Progressive Misconceptions aaron-gustafson.com/notebook/progr…

- "PE is a way to improve your code; UX as a continuum." This, and many other great takeaways: aaron-gustafson.com/notebook/progr… by @AaronGustafson

- Another great read for you web nerds thinking about progressive enhancement/SPAs/PWAs/etc: aaron-gustafson.com/notebook/progr… (HT -> @AaronGustafson)

- Good thoughts Aaron. Appreciate your voice on the matter

- Thanks!

- good post!

- that’s a better summary of everything we were talking about :o)

- Progressive Misconceptions by @AaronGustafson aaron-gustafson.com/notebook/progr…

- Thank you Aaron! Also a good read…I like to attack my opinions from all angles! Thanks for sharing…learned lots…

- I agree with “JavaScript is an indispensable part of the modern Web” and I prefer graceful degradation for the minority

- I think it happens some too, but then when new devs are labeled as “frameworkistas” it makes me wonder.

- this is awesome! Thanks!!

- Amazing reference on PE. "Progressive Enhancement Misconceptions", by @aarongustafson aaron-gustafson.com/notebook/progr…

- but for everyone one there’s posts like youtu.be/r38al1w-h4k?li… or something from zeldman

- honestly posts like that are amazing, reassuring and needed they’re thoughtful and considerate.


Comments

Note: These are comments exported from my old blog. Going forward, replies to my posts are only possible via webmentions.

What I don't see either in Nolan's article or in yours is anyone addressing what seems to me to be the core of the debate: whether or not PE should be the default for most web apps.

We all know the extreme cases where PE is objectively the right tool for the job, e.g. Wikipedia, and other extremes where it's objectively not, e.g. Angry Birds.

But the debate isn't about whether those extremes exist, it's about whether most apps are closer to Wikipedia or closer to Angry Birds on the spectrum.

If you believe most apps are closer to Wikipedia than Angry Birds on the spectrum, then you tend to insist that people should consider PE the default and expect to see good justifications for why it isn't used on a given project.

If you believe most apps are closer to Angry Birds than Wikipedia on the spectrum, then you tend to insist that people should not be required to use PE by default and expect to see good justifications for why it is needed on a given project.

Since the split is based on perceptions of the average use case, an inherently foggy and difficult to measure metric, adherence to one side or the other tends to resemble that of a partisan political divide.

From a psychological standpoint, the whole debate seems not unlike conservatives and liberals debating real political world views. And I think that goes a long way towards explaining why it is so divisive.

That said, I think we do need to settle the question of whether PE should be the default for most projects, or at least carve out some very clear and well agreed upon classes of apps where it is or isn't the right tool for the job, rather than philosophizing about it vaguely back and forth one side against the other.

This is science. We should be able to resolve this with metrics. In my view it's sad that it resembles partisan politics so much.

I’m 100% with you. I had originally intended to discuss when PE is appropriate and when it might not make sense, but it was going to be a fairly big tangent for this piece. I’m working on a follow-up.

I’m also 100% with you on the polarization angle and it has a lot to do with our circles on the Web. The more time we spend within communities that only think/talk/work like us, the more set we become in our ways, staunch we become in our views, and convinced we become that we are right and anyone who thinks differently is wrong. It happens in many areas. Politics and code certainly, but I imagine there’s some heated debate within other groups as well. I see a bit of it in the reef-keeping community and it probably exists in knitting circles too.

It’s incredibly important to expose yourself to new ideas and perspectives. It’s how we learn and it’s how we grow. It’s also how we identify the ideas we share and recognize our similarities rather than focusing on our differences.

This is a great discussion! I agree that we need to find more points of commonality.

Personally, coming from a community that focused on JavaScript, SPAs, and client-side frameworks, it took me a long time to even discover that PE advocates were out there, or that they had interesting things to say. The PE point of view was rarely represented in any of the blogs/meetups/podcasts that formed my education as a webdev.

And that's a shame, because both sides have a lot to teach the other. Consider how long it took for JS frameworks to finally recognize that server-side rendering was a good idea!

As I said in my post, I think the reason there's been so little dialogue is because the subject is a bit of a third rail. To borrow your political analogy, it's like trying to get progressives and conservatives together to talk about reproductive health – good luck having a calm discussion where nobody raises their voice or starts making accusations!

As for the question of the "spectrum" between Angry Birds and Wikipedia, these are definitely the kinds of questions we need to start asking. Laurie Voss also raised this point: http://seldo.com/weblog/201... .

I'm sure that a spectrum exists. To be honest, though, I'm not convinced that even the Wikipedia case is a slam-dunk for the PE side – when I'm using an Android phone, I vastly prefer Jake Archibald's Offline Wikipedia (https://jakearchibald.githu...) because it's legitimately a better user experience: works offline, can save articles for later viewing, very fast search, etc. And it's just a typical progressive web app: if you turn off JS, you get an empty app shell.

I watched a talk by Henrik Joreteg recently (https://youtu.be/hrAssE8meRo) that I think does a good job of laying out the use cases for the two different experiences. He points out that Twitter has a hybrid use case – when you land on a Tweet from some other website, you just want it to load fast (don't bootstrap a 2MB JS app just to show 140 characters!) whereas when you go to Twitter.com, you actually want more of a full-fledged app experience.

Part of it is the question of optimizing for first-time visitors vs repeat visitors, and part of it is the question of optimizing for the home page experience vs a leaf page experience. But the conflict can exist within the same site.

Another point he makes is that we've largely solved this problem on desktop (the desktop web is winning) whereas it's really only on mobile that we need to rethink our best practices (instead of just giving up and writing a native app, as many are doing). This IMO is where offline-first comes in, which is why I was so gung-ho about it in my blog post.

So maybe if you expect most of your users to come from mobile (where you have a much less reliable network connection), then your default choice should be an offline-first PWA rather than a PE static site. OTOH this might not play well to your team's skill set (if they're stronger in HTML/CSS than in JS), but I'm hopeful that eventually there will be frameworks for PWAs that will make it as easy to build a PWA as it is to build a WordPress site. (One can hope, right!)

Anyway, all interesting discussions. :) Let's keep the conversation going.